
Psst – Wanna Buy a Dashboard?

Have I got a deal for you. This Dashboard is specifically tailored to people just like you, with your pressing business issues, concerns, information needs, and analytical and reporting requirements.

Our Highly Paid Consultants created this Dashboard based on their Highly Remunerative Engagements with Executives and Business Leaders in your industry, including some of your partners and competitors.

We’re confident that the money they paid us was more than adequate to cover the cost of building this Dashboard in their environments and we’re pleased to be able to offer you the opportunity to leverage our Highly Paid Experience in their employ and acquire a Dashboard Just Like Theirs that will provide Strategic Business Value to you in your Executive Capacity.

By leveraging our Industry Leading Experience and employing our Standard Methodological Process via our Institutionalized Templated Project Plans we will be able to install your Dashboard in short order, with only the wiring up of the complex, impenetrable data backplane to your organization’s unique mix of custom operational systems left to do.

And we have just the staff of regimented, organized, fully industrialized workers to perform this Too Complex For You custom wiring, with no need for your people to get their hands dirty. At the Low, Low price of How Much Do You Have To Spend?

The best part of all this is that your new dashboard is guaranteed to be the envy of Boardrooms and Executive Suites across your industry. We leverage the full spectrum of marketware best practices in designing the Incredibly Attractive Visualizations that are guaranteed to produce the Wow! factor that only the highest-placed executives can truly appreciate.

You will have

- Archive Quality Photo Realistic Bulb Thermometers!

- Dial Gauges! licensed from the leading Italian Automotive Design Studios!

- Glistening, dripping wet-look 4D Lozenge Torus Charts! skinned with Authentic Southwest Arizona Diamondback scale patterns!

- Fully Immersive Holodeck Multi Dimensional Data Flyby Zones!

- Many more too numerous to mention!

Step right up.

Step right up.

A note on Tableau’s place in BI history.

I began this note as a response to Eric Paul McNeil, Sr’s comment on my earlier “Big BI is dead…” post, here, in which he notes that history repeats itself, relating the emergence of Tableau to Client/Server RDBMS’ effect in the mainframe-dominated 80s.

I agree. History does repeat itself, although I see a different dimension, one more directly tied to business data analysis, the essence of BI.

Back when mainframes ruled business computing, data processing shops controlled access to businesses’ data, doling it out in dribs and drabs. Sometimes grudgingly and only under duress, sometimes simply because the barriers to delivery were high, with lots of friction in the gears. Designing and writing COBOL to access and prepare data for green bar printing or terminal display was laborious and difficult.

Fast forward to the present and the modern Enterprise Data Warehouse is today’s data processing shop. Monolithic, gigantic, all-swallowing, the data goes in but business information has a very hard time getting out, for largely the same reasons: the technology is enormous, and enormously complex, requiring legions of specialized technologists to prime the pumps, control the valves, man the switches, and receive their sacrifices. Size and Complexity are universally accompanied by their partners Command and Control. Simply put, the engagement of the resources and personnel required to get anything out of modern Big BI data warehouse-based systems demands the imposition of processes and procedures nominally in place to ensure manageability and accountability, but pretty much guaranteeing that delivering the information required by the business stakeholders takes a back seat. Sad but true. As a very wise man once said: “Beware the perils of process.”

The parallels continue. In the way-back some very clever fellows realized that there was a better way. Instead of mechanically writing the same structural COBOL code to munge data into the row-oriented, nested sorting with aggregations reports in demand, they abstracted the common operations into English, resulting in the ability to write this:

TABLE FILE EMPLOYEE

SUM SALARY

BY DEPARTMENT

END

with the expected results. This was the birth of the 4GL. Ramis begat FOCUS, with NOMAD in the wings. All was good in the world. Businesses could get immediate, direct access to the information in their data without needing to offer the appropriate sacrifices to the DP high priests.
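For readers who never met a 4GL, the request above amounts to a grouped sum. Here is a minimal Python sketch of the same operation, assuming a small made-up EMPLOYEE table; the rows, values, and the `sum_by` helper are illustrative only and are not taken from FOCUS or any real file.

```python
from collections import defaultdict

# Hypothetical EMPLOYEE rows; departments and salaries are made up for illustration.
EMPLOYEE = [
    {"DEPARTMENT": "MIS", "SALARY": 18000},
    {"DEPARTMENT": "PRODUCTION", "SALARY": 26000},
    {"DEPARTMENT": "MIS", "SALARY": 27000},
    {"DEPARTMENT": "PRODUCTION", "SALARY": 11000},
]


def sum_by(rows, measure, by):
    """Total `measure` for each distinct value of `by`, sorted by the `by` value."""
    totals = defaultdict(int)
    for row in rows:
        totals[row[by]] += row[measure]
    return sorted(totals.items())


if __name__ == "__main__":
    # Rough equivalent of: TABLE FILE EMPLOYEE / SUM SALARY / BY DEPARTMENT / END
    for department, salary in sum_by(EMPLOYEE, "SALARY", "DEPARTMENT"):
        print(f"{department:<12}{salary:>10}")
```

Even in a friendly modern language the grouping machinery has to be spelled out; the four-line 4GL request states only the intent.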

Then came the dark years. The clarity was lost, and the ability for business people to directly access their own business data diminished as the data became locked first in impossible-to-comprehend atomic data tables (sometimes even relational), and then, in an irony too delicious for words, in the massive dimensionally modeled monsters that were supposed to save the day but instead imposed themselves between the business people and their data.

And now we have the emergence of Tableau which, along with its cousins, delivers the same paradigm-changing ability to short-circuit the data guardians and establish the intimate connection between business people and their data.

-Their- data.

Once again, as BI professionals, and I consider myself lucky to have been one, albeit under different names, for 25 years, we have the opportunity to observe and honor one of the maxims of our profession:

All BI is local.

In the five years I’ve been using Tableau I’ve been watching it, and wondering what trajectory it would follow. Would it preserve its essential beauty and grace as it evolved, or would it choose a path that led it into darkness, like its conceptual ancestors? I watched FOCUS stumble and lose its way, and haven’t wanted to see it happen to Tableau. So far, so good. Tableau has preserved its integrity, its nature, even as it has grown and adopted new features. There have been a few rough edges but the company seems truly committed to continuing to create and deliver the absolute best product possible. One that helps, supports, and lives to serve as unobtrusively as possible instead of demanding that people make concessions to the machinery.

Sometimes history knows when enough is enough.

Mapping Tableau Workbooks

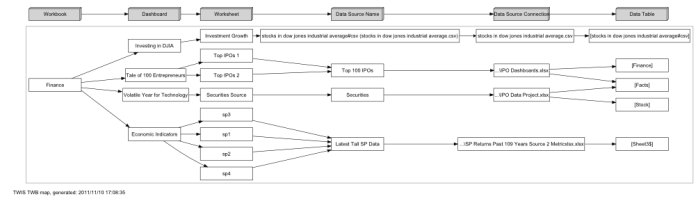

As Tableau workbooks grow it becomes difficult to keep track of their various elements—e.g. Dashboards, Worksheets, and Data Sources—and the relationships between them.

I’ve found that mapping out these elements is helpful in identifying and understanding them, particularly with workbooks that I didn’t create. Benefits include understanding complex relationships that aren’t surfaced well in the Tableau UI, such as the connections between worksheets and data sources, including the scope of global filters; spotting hidden worksheets; and identifying anomalies like unused data sources, dashboards that reference no worksheets, even orphaned worksheets.

When I began working with Tableau five years ago I started out hand-drawing maps as I worked in the workbooks, recording the parts as I encountered them.

The discovery that Tableau workbooks are XML(ish) was a big step forward, making it possible to use straightforward text handling techniques to identify the parts, and some of the obvious relationships between them. It was straightforward to use grep and awk, sometimes a dash of perl, to help tease out the information. Changing a workbook’s file extension to xml and viewing it in an XML-aware tool was really helpful; Firefox is very useful for this.
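The same kind of first-pass inventory can be sketched with an XML parser instead of grep and awk. The example below is a minimal sketch, not how TWIS works: the embedded sample and the element and attribute names it relies on (`worksheet`, `dashboard`, `datasource`, `zone`, `name`) are assumptions made for illustration and should be checked against your own workbooks.

```python
import xml.etree.ElementTree as ET

# A tiny stand-in for a .twb file. Element and attribute names are
# assumptions for illustration, not a documented Tableau schema.
SAMPLE_TWB = """<workbook>
  <datasources>
    <datasource name='Sales' />
    <datasource name='Budget' />
  </datasources>
  <worksheets>
    <worksheet name='Revenue'>
      <table><view><datasources><datasource name='Sales' /></datasources></view></table>
    </worksheet>
    <worksheet name='Costs'>
      <table><view><datasources><datasource name='Sales' /></datasources></view></table>
    </worksheet>
  </worksheets>
  <dashboards>
    <dashboard name='Overview'>
      <zones><zone name='Revenue' /></zones>
    </dashboard>
  </dashboards>
</workbook>"""


def inventory(twb_text):
    """List data sources, worksheets (with their sources), and dashboards (with their sheets)."""
    root = ET.fromstring(twb_text)
    # Each worksheet maps to the data source names found anywhere beneath it.
    worksheets = {
        ws.get("name"): sorted({ds.get("name") for ds in ws.iter("datasource")})
        for ws in root.iter("worksheet")
    }
    # Each dashboard maps to the zones whose name matches a known worksheet.
    dashboards = {
        db.get("name"): sorted(
            {z.get("name") for z in db.iter("zone") if z.get("name") in worksheets}
        )
        for db in root.iter("dashboard")
    }
    # Only the workbook-level data source declarations, in document order.
    sources = [ds.get("name") for ds in root.findall("./datasources/datasource")]
    return {"datasources": sources, "worksheets": worksheets, "dashboards": dashboards}


if __name__ == "__main__":
    for kind, items in inventory(SAMPLE_TWB).items():
        print(kind, items)
```

In this sample, Budget is declared but used by no worksheet, which is the sort of anomaly a map makes visible.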

Still, there were difficulties. Some of the relationships are not straightforward, and require deeper inspection and interpretation.

So I built the map-making ability into TWIS, the app I wrote to inventory Tableau workbooks, and the results are pretty satisfying. The map of the Tableau Finance sample workbook is shown rendered in PNG; clicking on it will display the PDF rendering, with much better resolution and suitable for printing. I’ve also posted links to a wide variety of Tableau workbook maps here. For more information on TWIS, its home page is here.

Orphaned Worksheets

This link is to the PDF of the Tableau Sample Workbook Science.twb (v6.0). Interestingly, it shows the existence of several worksheets that can’t be found using Tableau. Orphaned worksheets, like these, were at one time included in a Dashboard, and left stranded when the last Dashboard that referenced them was deleted. As far as I know, this is the only presentation of orphaned worksheets. As of v6.1 Tableau identifies this last-Dashboard-reference to a worksheet when deleting the last Dashboard and asks whether or not it should proceed, making the ongoing orphaning of worksheets much less likely. As of this writing it’s unclear whether or how legacy orphaned worksheets will be handled in Tableau v6.1 and beyond, but at least there’s now a way to identify them.
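Once the worksheet and dashboard relationships are known, spotting candidates for orphaned worksheets is a small set operation. The sketch below assumes, purely for illustration, that we already have the list of worksheets, the set of worksheets with no visible tab, and the worksheets each dashboard references; how those facts are recorded inside a workbook is not covered here, and all names in the example are hypothetical.

```python
def orphaned_worksheets(worksheets, hidden, dashboards):
    """Return hidden worksheets that no dashboard references, sorted by name.

    worksheets: iterable of all worksheet names in the workbook
    hidden:     set of worksheet names with no visible tab (assumed known)
    dashboards: dict mapping dashboard name -> list of worksheet names it uses
    """
    referenced = {name for sheets in dashboards.values() for name in sheets}
    return sorted(ws for ws in worksheets if ws in hidden and ws not in referenced)


# Hypothetical workbook contents, for illustration only.
worksheets = ["Map", "Trend", "Detail", "Old KPI"]
hidden = {"Detail", "Old KPI"}
dashboards = {"Summary": ["Map", "Detail"]}

if __name__ == "__main__":
    print(orphaned_worksheets(worksheets, hidden, dashboards))
```

Here "Detail" is hidden but still used by the Summary dashboard, so only "Old KPI" is reported.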

I’ll be posting the results of a more thorough investigation into orphaned worksheets soon.

Big BI is dead. But it’ll twitch for a while.

The end came last week in Las Vegas at the annual Tableau Customer Conference.

Big BI put up a valiant struggle, but it’s been unwell for some time, sputtering along, living on past glories real and imagined.

Its passing was inevitable. Big, bloated, and overripe, it never lived up to its promise of being the path to data enlightenment. Although its nominal goals were good and proper, in practice it failed to deliver. Much has been written on Big BI’s problems, including here, here, and here.

Big BI flourished, and then held on in spite of its flaws. Partly through inertia—a lot of money and time had been spent over its two decades of dominance. Partly through pervasiveness—Big BI’s proponents have been hugely successful at promoting it as the one true way. Partly through the absence of the disruptive technology that would upend the BI universe.

Big BI is brain-dead, but support systems are keeping the corpse warm.

Like all empires on the wane, its inhabitants and sponsors haven’t realized it yet. Or, cynically, those who have are milking it for what they can get while the getting is still good. Like many empires, its demise comes not with a big bang, but with a nearly silent revolution that upends the established order. Even as the Big BI promoters and beneficiaries are flush, fat, and happy, their base of influence and position, wealth and power, has eroded away, leaving them dining on memories.

Big BI’s fundamental premise was always deeply flawed, erroneous when it wasn’t disingenuous or worse. The paradigm held that the only approach to achieving Business Intelligence within an organization was through the consolidation of the enterprise’s business data into data warehouses from which a comprehensive, all-encompassing single version of the truth could be achieved.

The only points of differentiation and discussion in the Big BI universe were squabbles about ultimately minor aspects of the core reality. Data Warehouses vs Data Marts. Inmon vs Kimball (Google “Inmon Kimball”). Dimensionally modeled analytical databases are relational vs no they’re not. And so on and so forth and such like.

The baseline concept remained the same: Business Intelligence is achieved by collecting, cleansing, transforming and loading business information into the integrated, homogenized, consolidated data store (Mart or Warehouse), where it then, and only then, can be fronted by a large, complex, complicated “Enterprise BI Platform” that provides a business semantic facade for the dimensional data and is the only channel that can be used to code up, create, and deliver reports, graphs, charts, dashboards, strategy maps, and the like to the business people who need to understand the state of their area of responsibility and make data-based decisions.

The overarching goal is completely correct: delivering information (intelligence) to the real human people who need it. But the reality is that Big BI has abjectly failed to deliver. With an eye to history, and another to the evolution of technology, the possible end of Big BI has been in sight for some time. The history of BI is deep and rich, encompassing much more than Big BI. A brief history (mine) is here.

What happened? Why now? Why Tableau?

A number of years ago novel products appeared, sharing the concept that data access and analysis should be easy and straightforward, that people should be able to conduct meaningful, highly valuable investigations into their data with a minimum of fuss and bother.

Tableau was the best of these products, squarely aimed at making it as simple and straightforward as possible to visualize data. This simple principle is the lever that has ultimately toppled Big BI by removing the barriers other technologies impose between real human people and their data, and the friction they impose, making data access and analysis a chore instead of an invigorating and rewarding experience.

But Big BI was well established. There were institutes, academics, huge vendors to sell you their databases and Enterprise BI platforms, and huge consultancies to help you wrangle the technology.

And there was a whole generation indoctrinated in the One True Way that held as articles of faith that there is only One Version Of The Truth, that only Enterprise-consolidated data carries real business value, that business data is too important to be left to its owners: like Dickensian orphans it needs to be institutionalized, homogenized, and cleaned up before it can be seen in public.

Tableau has a different philosophy. Data is in and of itself valuable. Data analysis is the right and privilege of its owners. Data analysis should be fast, easy, straightforward, and rewarding. There is truth in all data, and all those truths are valuable.

Still, the Big BI advocates found ways to block the radical upstarts, the data democratizers. “But what about consistency across units?” “How can we trust that two (or more) people’s analyses are equivalent?”

And the most damning of all: “Pretty pictures are nice toys, but we need the big, brawny, he-man industrial controls to ensure that we at the top know that we’re getting the straight poop.” There’s so much wrong with this last one that it will take several essays to unwind it. (to be continued)

Distilled to their essence, the objections of the Big BI proponents to the use of Tableau as a valuable, meaningful, essential tool for helping achieve the real goal of getting the essential information out of business data into the minds of those who need it as fast as possible, as well as possible, are these:

Point: There must be single-point, trusted sources of the data that’s used to make critical business decisions.

Subtext: Local data analysis is all fine and good but until we can have point control over our data we’re not going to believe anything.

Subsubtext: This is an erroneous perspective, and ultimately harmful to an organization, but that’s another story. The reality is that, misguided as it is in the entire context, there is a need to have single-source authoritative data.

Tableau’s response: the upcoming Tableau version 7 provides the ability to publish managed, authoritative data sources to the Tableau Server, available for use by all Tableau products. This feature provides the single trusted data source capability required for Enterprise data confidence.

Point: There must be absolute confidence that similarly named analyses, e.g. Profit, are in fact comparable.

Subtext: As long as people have the opportunity to conduct their own data analysis we suspect that there will be shenanigans going on and we need a way to make sure that things are what they claim to be.

The Tableau reality: Tableau’s data manipulations are, if not transparent, not deliberately obfuscated. Every transformation, including calculations, e.g. Profit, is visible within the Tableau workbook that it’s part of. There are two ways to ensure that multiple analyses are conveying the same data in the same way: the workbooks containing the analyses can be manually inspected; or the workbooks can be inventoried with a tool designed for the purpose, the result of which is a database of the elements in the workbooks, through to and including field calculations, making the cross-comparisons a simple matter of data analysis with Tableau.

Tableau does not itself possess the self-inventorying ability. There is, however, such a tool available: the Tableau Workbook Inventory System (TWIS), available from Better BI, details are available here.
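To make the cross-comparison idea concrete, here is a minimal sketch of checking whether same-named calculations agree across workbooks. It assumes an inventory has already been flattened into (workbook, field name, formula) rows; that layout, the workbook names, and the formulas are hypothetical and are not TWIS’s actual output format.

```python
from collections import defaultdict

# Hypothetical inventory rows: (workbook, field name, calculation formula).
INVENTORY = [
    ("East.twb", "Profit", "[Sales] - [Cost]"),
    ("West.twb", "Profit", "[Sales] - [Cost] - [Freight]"),
    ("East.twb", "Margin", "[Profit] / [Sales]"),
    ("West.twb", "Margin", "[Profit]  /  [Sales]"),
]


def conflicting_calculations(rows):
    """Map each field name to its formulas when workbooks disagree on the definition."""
    formulas = defaultdict(lambda: defaultdict(list))
    for workbook, field, formula in rows:
        # Collapse runs of whitespace so spacing differences are not flagged.
        normalized = " ".join(formula.split())
        formulas[field][normalized].append(workbook)
    return {
        field: {formula: sorted(books) for formula, books in by_formula.items()}
        for field, by_formula in formulas.items()
        if len(by_formula) > 1
    }


if __name__ == "__main__":
    for field, variants in conflicting_calculations(INVENTORY).items():
        print(field, variants)
```

Only Profit is reported: the two Margin formulas differ in spacing alone, while the two Profit formulas genuinely compute different things.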

So Big BI’s day is done. The interesting part will be watching how long it will take before its grip on business data analysis (Business Intelligence) loosens and enterprises of all types and sizes really begin to garner the benefits of being able to hear the stories their data has to tell.

BI RAD – Business Intelligence Rapid Analytics Delivery

A fundamental virtue in BI is the delivery of value early, often and constantly.

Business Intelligence—Rapid Analytics Delivery (BI RAD) is the practice of creating high quality analytics as quickly, efficiently, and effectively as possible. Done properly, it’s possible to develop the analytics hand-in-hand with the business decision makers in hours.

BI RAD requires a combination of tools that permit easy access to the business data and are highly effective and usable in the exploratory model of analysis: browsing through the data looking for interesting patterns and anomalies, and then persisting meaningful analyses as analytics.

BI RAD emerged as a viable practice over twenty years ago with the first generations of specialized reporting tools that were much more nimble and effective than the COBOL report generation coming from DP shops. I worked extensively with FOCUS, the original 4GL, first at JCPenney, then as a consultant and product manager with Information Builders, Inc., FOCUS’ vendor. People were surprised, sometimes shocked, at how rapidly useful reports could be generated for them: once up to speed, new reports could generally be available within a day, sometimes a couple of hours.

In today’s world of Big BI, where enterprise BI tools and technologies are the norm, business decision makers have become accustomed to long waits for their BI analytics. In a sense, Big BI has become the modern DP; the technology has become so large and complex that getting it installed and operational, with everything designed, implemented, cleansed, ETL’d, and so on before any analytics get created consumes all the time, energy, effort and resources available and results in failure and disappointment, with very little value delivered in the form of information making it into the minds of the real human people who need it.

Yet BI RAD lives. An entire modern generation of direct-access data discovery tools has emerged, providing the ability to establish an intimate connection between business data and the people who need insights into the data in order to make informed business decisions.

Tableau, from Tableau Software, is a near-perfect tool for BI RAD. It connects to the great majority of business data sources almost trivially easily, and is unsurpassed in terms of usability and quality of visualizations for the majority of business analytics needs.

Using Tableau, it’s possible to sit down with a business stakeholder, connect to their data, and jump right into exploring their data. Any interesting and valuable analyses can be saved and kept at hand for immediate use.

Experience in multiple client environments has shown that these analyses have multiple overlapping and reinforcing benefits to the organization. The immediate and obvious benefit is that business decision makers are provided the data-based insights they require to make high quality business decisions. Beyond that, the analyses created are the best possible requirements for Enterprise analytics.

Who could ask for anything more?

How Big BI Fails To Deliver Business Value

Business Intelligence, the noun, is the information examined by a business person for the purpose of understanding their business, and used as the basis of their business decisions.

Business Intelligence, the verb, is the practice of providing the business person with the Business Intelligence they require.

Business Intelligence is conceptually based in a valuable proposition: delivery of actionable, timely, high quality information to business decision makers provides data-based evidence that they can use in making business decisions.

Timely, high quality information is extremely valuable. It’s also somewhat perishable. Deriving the maximum business value from Business Intelligence is the expressed value proposition of all BI projects. Or it should be.

Enterprise Business Intelligence is the entire complex of tools, technologies, infrastructure, data sources and sinks, designs, implementations and personnel involved in collecting business data, combining it into collective stores, and creating analyses for access by business people.

Enterprise BI projects are very strongly biased towards complexity. They’re based on the paradigm of using large complex products, platforms, and technologies to design and build out data management infrastructures that underpin the development of analytics (reports, dashboards, charts, graphs, etc.) that are made available for consumption.

Big BI is what happens when Enterprise Business Intelligence grows bigger than is absolutely necessary to deliver the BI value proposition. Unfortunately, Enterprise BI tends to mutate into Big BI, and the nature of Big BI almost invariably impedes the realization of the BI business value proposition.

Big BI is by its very nature overly large, complex and complicated. There are many moving parts, complicated and expensive products, tools and technologies that need to be installed, configured, fed, and cared for. All before any information actually gets delivered to the business decision makers.

Big BI is today’s Data Processing. In the old days of mainframes, COBOL, batch jobs, terminals, and line printers, business people who wanted reports had to make the supplicant journey to their Data Processing department and ask (or beg) for a report to get created, and scheduled, and delivered. Data Processing became synonymous with “we can do that (maybe) but it’ll take a really long time, if it happens at all.” Today’s Big BI is similarly slow-moving.

There are multiple reasons for this sad state of affairs. In the prevalent paradigm, new BI programs need to acquire the requisite personnel, infrastructure, tools, and technologies, all of which need to be installed and operational, which can take a very long time. Data needs to be analyzed, information models created, reporting databases designed, ETL transformations designed and implemented, reporting tool semantic layers (e.g. Business Objects Universes, Cognos Frameworks) constructed, reports created, and, finally, the reports made available.

Only then do the business decision makers (remember them?) benefit.

This entire process can take a very long time. All too frequently it takes months. Not just because of the complexity of the tools and process, but also because of the friction inherent in coordinating the many moving parts and involved parties, each with their own bailiwick and gateways to protect.

The tragic part in many Big BI projects is that the reports delivered to the business people usually fall far short of delivering the business value they should provide.

The reports are out of date, or incomplete, or no longer relevant, or poorly designed and executed, or simply wrong because the report production team “did something” without having a clear and unambiguous understanding of the information needs of the business decision maker.

In far too many instances there’s a vast gulf between the business information needs and the reports that get developed and delivered. This situation occurs because there’s too much distance, in too many different dimensions, between the business and the report creators.

Here is a map of the structure—the processes and artifacts, and the linkages between them—of a typical Big BI project. It’s worth studying to see how far apart the two ends of the spectrum are. At one end is the business person, with their business data that they need to analyze. At the far end, at the end of all of the technology, separated by multiple barriers from the business, are the report generators.

A typical scenario in this environment is that somebody, with any luck a competent business analyst, sometimes a project manager, all too frequently a technical resource, is tasked with interviewing the business and writing up some report specs—perhaps some wireframes and/or Word documents, maybe Use Cases. The multiple problems with these means of capturing analytics requirements are too extensive to discuss here. One fatal flaw that occurs all too frequently must be mentioned, however: the creator of the report specs lacks the professional skills required to elicit, refine, and communicate appropriate, high quality, effective business analytics requirements. As a result the specifications are inadequate for their real purpose, and their flaws flow downstream.

These preliminary specs are then used as the inputs into the entire BI implementation project. Which then goes about its business of creating and implementing all of the technological infrastructure necessary to crank out some analyses of the data. At the end of the “real work” a tool specialist renders the analytics that then get passed back to the business.

The likelihood of this approach achieving anything near the real business value obtainable from the data is extremely low. There’s simply too much distance, and too many barriers, between the business decision makers and the analyses of their data. Big BI has become too big, too complex, with too much mass and inertia, all of which get between the business and the insights into their data that are essential to making high quality business decisions.

There is, however, a bright horizon.

Business Intelligence need not be Big BI. Even in those circumstances where Enterprise BI installations are required, and there are good reasons for them, they need not be the monolithic voracious all-consuming resource gobblers they’ve become. Done properly, Enterprise BI can be agile, nimble, and highly responsive to the ever-evolving business needs for information.

Future postings will explain how this can be your Enterprise BI reality.

Eleven Times Less Innumeracy

“Moving to an advanced format of 4K sectors means about eight times less wasted space but will allow drives to devote twice as much space per block to error correction.”

This bit of nonsensical numerical fluff can be found at: http://news.bbc.co.uk/2/hi/technology/8557144.stm

This “x times less” or “x times fewer” construction is becoming extremely annoyingly common.

A rational person instantly recognizes that there is no meaning to it, that a multiplication applied in a reduction context is intrinsically void of numerical content. In order for a multiplication operation to work there must be a specified quantity being multiplied. This popular emptiness lacks any such multiplied quantity: “less” is a perfectly nice and useful qualitative term, but it lacks the quantitative substance of a true multiplicand.

If the intent is to convey a measure of reduction, the proper construction is “x is {some fraction} of y”. Rewriting the bad BBC bit: “Moving to an advanced format of 4K sectors means about one-eighth as much space will be wasted but will allow drives to devote twice as much space per block to error correction.”
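A quick arithmetic sketch shows the difference. It assumes one possible literal reading of “x times less” (subtracting x multiples of the original) purely to show that no sensible number falls out; the starting figure of 80 units is made up, and both function names are hypothetical.

```python
from fractions import Fraction


def literal_times_less(quantity, x):
    """One literal reading of 'x times less': subtract x multiples of the quantity."""
    return quantity - x * quantity


def fraction_of(quantity, x):
    """The intended meaning: the new value is 1/x of the original."""
    return Fraction(quantity, x)


wasted = 80  # hypothetical units of wasted space under the old format

if __name__ == "__main__":
    # A negative amount of wasted space, which is meaningless.
    print(literal_times_less(wasted, 8))
    # One-eighth as much wasted space, which is what the writer meant.
    print(fraction_of(wasted, 8))
```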

Of course, there’s some funkiness with the “but…” clause, but that’s another seven times less can of worms.

Commenting on Agile Enterprise Data Modeling

I’ve been practicing agile BI for over twenty years, and am very happy to see this article by Information Management Magazine’s Steve Hoberman: “Is Agile Enterprise Data Modeling an Oxymoron?”

The gist of the article is that agile practices have great value in enterprise data modeling, a sentiment I heartily agree with. This posting recaps my comment on Hoberman’s article.

Agile BI, which includes agile data modeling, deliberately sets out to deliver business value early, often and continuously in the form of meaningful, timely, high quality analytics. Delivering the information business decision makers require when they need it is the whole point of BI. Nothing else matters. Not building elegant data models. Not buying, installing and configuring hugely expensive enterprise BI software. Not building systems that can answer any question that might ever be asked.

Far too many BI initiatives fall into the trap of trying to deliver the universal analytical appliance as their first achievement. As the maxim puts it: “You can’t start with everything; if you try you’ll never deliver anything.”

While I agree with the major thrust of Hoberman’s article there are a couple of misinterpretations of agility that it perpetuates.

First up: “A majority of projects driven by agility, however, lack a big-picture focus and often strive to deliver small slices of functionality within tight time frames, at times redoing and revamping prior work.” While it seems reasonable, even wise, it’s naive and there are a couple of corrections required to this statement.

Redoing and revamping prior work is an essential element in any agile undertaking. In “normal” agile software development this is called refactoring and is a good thing. Agile BI is no different. Refactoring is always part of the process: agile projects surface and embrace it, make it part of the normal course of events; Big BI projects hide it under the covers where it pollutes everything, adds tremendous friction, and invisibly hinders progress.

There’s a hint in the statement that agile projects tend to “lack a big-picture focus” (yes, I know it’s qualified, but the smell of allegation lingers). This is a misrepresentation of the true nature of agility. Agility requires a professional awareness of the large scale structures of the diverse elements in the mix. A project that doesn’t do this may wear the clothes of agility, but doesn’t possess its soul. Just throwing functional bits out into the world without regard for the rich complexity of the environment will inevitably lead to a terrible mess. An organization that creates such a mess is unlikely to get it cleaned up, and some poor schlub is usually left trying to continue to work with it while getting blamed for the inability to produce results.

There’s also an argument to be made against using a pre-existing framework to guide agile BI. While it’s incumbent upon BI professionals to recognize the conceptual architectures (business and technical) forming the environment, the paradigm that a pre-formed architecture is necessarily a valuable asset fails to recognize the costs, burdens, and downstream ill effects that result from the principle of simply trying to “fill in the details.” Experience shows that the best architectures are emergent, resulting from the continual refactoring of the developing systems while adhering to suitable and appropriate architectural principles. In this approach, there’s always an intentional architecture that fully supports the real needs of the system, is flexible and adaptable (agility at work!), and can be grown and refined as necessary while never imposing costs not directly related to delivering specific business value.

High Level Enterprise BI Project Activities Diagrams

This PDF document–Data Warehouse Typical Project–maps the normal set of high level activities involved in Enterprise BI projects.

Typical Enterprise BI projects are complex affairs that have difficulty delivering Business Intelligence quickly due to multiple factors:

- there are many discrete, interconnected activities with stringent analytical dependencies

- there is generally a lack of analytical expertise brought to bear on the data, meta-data, and meta-meta-data that needs to be transported and communicated between the various parties

- high quality requirements are extremely difficult to achieve, primarily because there’s an enormous amount of setup work that needs to occur before an Enterprise BI tool can be connected to real data and be used to develop preliminary analytics

- absent live reports, any end-user analytical requirements are usually low quality, low fidelity best guesses made in the absence of the real feedback essential for arriving at truly useful Business Intelligence

This PDF, Data Warehouse – Tableau Augmented Project Processes, highlights those areas where Tableau can be profitably employed to dramatically improve the velocity and quality of the Business Intelligence delivered to business decision makers, and to significantly streamline the entire process by introducing the practices of data analysis into the Enterprise BI project activities.

In practice, this approach has been shown to provide business value early and often, and to result in better Enterprise BI outcomes much sooner and at lower cost. This leaves more resources available for continuing to expand the scope, sophistication, velocity and quality of the Business Intelligence provided, and therefore delivers much higher business value.

Common Problems Saving Tableau Packaged Workbooks

Tableau’s packaged workbooks are tremendously useful. Bundling data with the workbook allows anyone to peruse the data using the Workbook without having access to the original source data. I use them frequently in large BI projects as a way of providing Reports to end users, analyses of data all along the project process chain, even in providing the database schema to downstream technical teams when the “normal” processes take too long.

Packaged Workbooks can be opened with the Desktop Application or the Tableau Reader. Published to the Tableau Server, they’re available just like normal Workbooks.

Creating a Packaged Workbook is really pretty straightforward: create an extract of the data (for every data source used in the Workbook); save or export the Workbook in its packaged form.

There are a couple of reasonably common circumstances I’ve run into again recently; this post covers them.

Problem—SQL Parsing error creating the extract

I’ve seen this more than once: when Tableau tries to create an extract it fails with a fairly obscure error along the lines of “Data format string terminated prematurely”, which seems to indicate that there’s been a problem parsing a date value using whatever internal format it’s employing. There are no calculated fields or data calculations, so it’s really puzzling and Tableau doesn’t really provide any diagnostics.

There’s also the matter that this problem doesn’t surface until the extract is under preparation, implying that it’s not involved with any of the fields being referenced in the Worksheets, which leads us to the

Solution—Hide the unused fields and try to create the extract

Almost too easy, isn’t it? Hiding the unused fields also reduces the size of the extract, which in some cases makes a big difference. On the other hand, the unused fields aren’t available for use in the extract, and therefore in the Packaged Workbook; this isn’t a problem for Tableau Reader users, but limits those Desktop Application and Server users who otherwise could extend the Workbook’s analytics.

Problem—creating the Packaged Workbook generates an “unconnectable data source” message

[insert message here]

Solution—find and close any Data Connections that aren’t being used

Orphaned Data Connections can have a number of causes, but they usually arise because the last Worksheet using the Data Connection was deleted or pointed to another Data Connection.

Finding and closing unused Data Connections from within the Workbook can be a bit of a hunting expedition–this will be the topic of another post. But very soon the Tableau Inventory will identify orphaned Data Connections.
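In the meantime, here is a rough sketch of how the hunt might be automated from outside Tableau. It is not the Tableau Inventory and not an official method. It assumes the Workbook's .twb file is XML in which each Data Connection appears as a datasource element under a top-level datasources element, and each Worksheet lists the ones it uses under its view element. That layout, the "Parameters" pseudo data source, the function name and the sample Workbook are all assumptions for illustration; check them against your own files.

```python
import xml.etree.ElementTree as ET


def find_orphaned_datasources(twb_xml):
    """Return the labels of data sources that no worksheet references.

    Hypothetical helper: the element layout below is an assumption
    about the .twb XML, not a documented Tableau API.
    """
    root = ET.fromstring(twb_xml)

    # Assumed: every Data Connection is declared once at the top level.
    declared = {}
    for ds in root.findall("./datasources/datasource"):
        name = ds.get("name")
        # Assumed: parameters live in a pseudo data source named "Parameters".
        if name and name != "Parameters":
            declared[name] = ds.get("caption") or name

    # Assumed: each worksheet names the data sources it uses under <view>.
    used = set()
    for ref in root.findall("./worksheets/worksheet//view/datasources/datasource"):
        used.add(ref.get("name"))

    return sorted(label for name, label in declared.items() if name not in used)


# Made-up Workbook: two Data Connections, only one used by a Worksheet.
SAMPLE = """
<workbook>
  <datasources>
    <datasource name='sales' caption='Sales'/>
    <datasource name='old_orders' caption='Old Orders'/>
  </datasources>
  <worksheets>
    <worksheet name='Sheet 1'>
      <table><view><datasources>
        <datasource name='sales' caption='Sales'/>
      </datasources></view></table>
    </worksheet>
  </worksheets>
</workbook>
"""

print(find_orphaned_datasources(SAMPLE))
```

With the sample above, only "Old Orders" is reported, since no Worksheet refers to it. The sketch only looks at Worksheets, so anything that references a Data Connection some other way would need extra checks, and the closing itself still has to be done in Tableau.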